For the latest tutorials, visit Weights & Biases on Google Cloud.

Do you want to experiment with Google AI models on Weave without any setup? Try the LLM Playground.

This page describes how to use W&B Weave with the Google Vertex AI API and the Google Gemini API.

You can use Weave to evaluate, monitor, and iterate on your Google GenAI applications. Weave automatically captures traces for the following:

- Google GenAI SDK, which is available for Python, Node.js, and Go, and through REST.

- Google Vertex AI API, which provides access to Google’s Gemini models and various partner models.

Weave also supports the deprecated Google AI Python SDK for the Gemini API. This support is deprecated as well and will be removed in a future version.

Get started

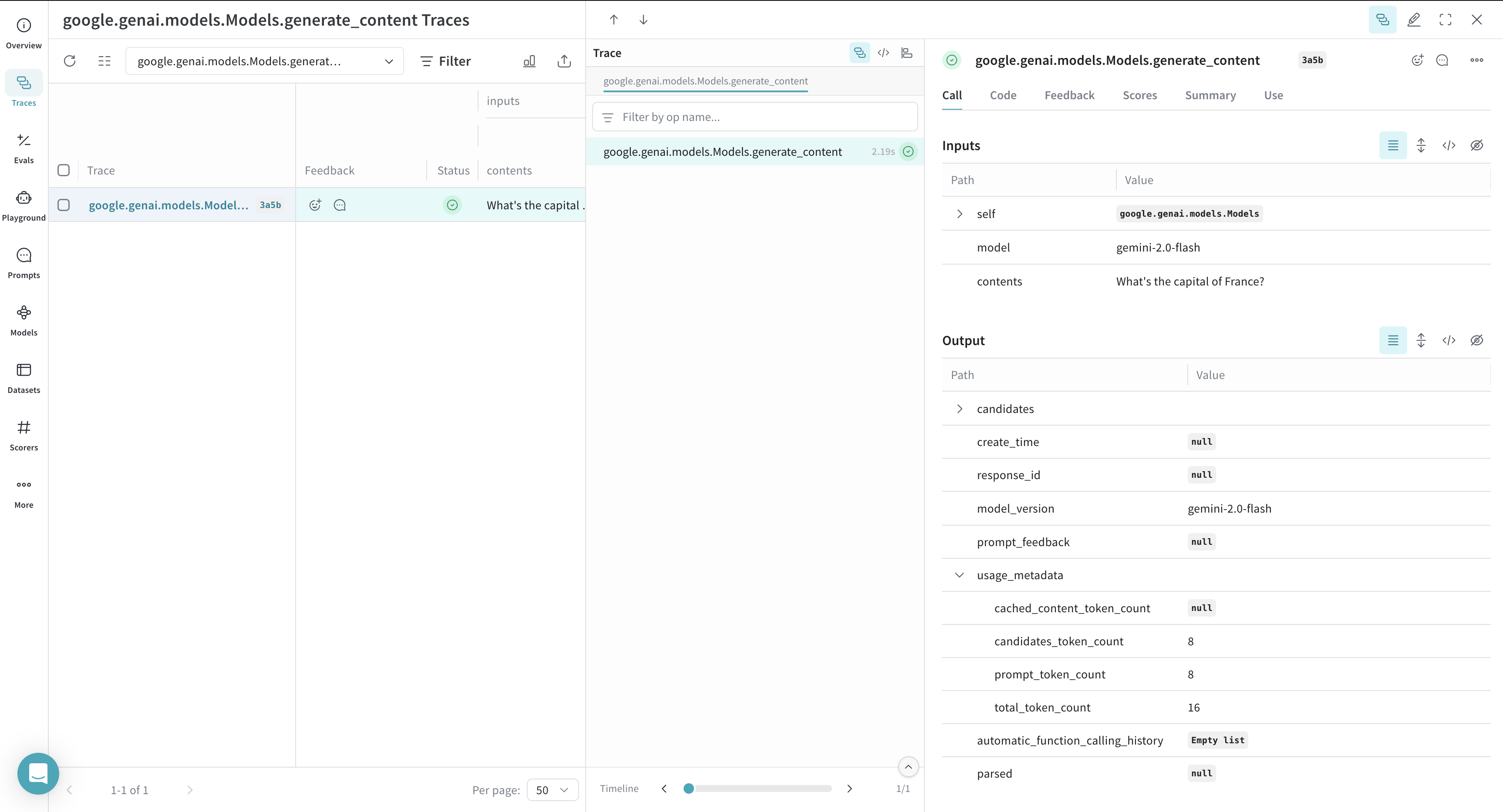

Weave automatically captures traces for the Google GenAI SDK. To start tracking, call `weave.init(project_name="<YOUR-WANDB-PROJECT-NAME>")` and use the library as normal. If you don't specify a W&B team when you call `weave.init()`, your default entity is used. To find or update your default entity, refer to [User Settings](https://docs.wandb.ai/guides/models/app/settings-page/user-settings/#default-team) in the W&B Models documentation.

```python
import os

from google import genai

import weave

weave.init(project_name="google-genai")

google_client = genai.Client(api_key=os.getenv("GOOGLE_GENAI_KEY"))

response = google_client.models.generate_content(
    model="gemini-2.0-flash",
    contents="What's the capital of France?",
)
```
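To log traces to a specific team rather than your default entity, you can prefix the project name with the team, as in `"<team>/<project>"`. The helper below is a minimal sketch, assuming that `weave.init` accepts either a bare project name or a `team/project` string; `weave_project_name` is a hypothetical convenience, not part of Weave. The actual `weave.init` call is left as a comment because it needs W&B credentials.

```python
from typing import Optional


def weave_project_name(project: str, team: Optional[str] = None) -> str:
    """Build the string to pass to weave.init(project_name=...).

    Hypothetical helper. Assumption: Weave accepts "<project>" (logs to your
    default entity) or "<team>/<project>" (logs to that team).
    """
    if not project or "/" in project:
        raise ValueError("project must be a non-empty name without '/'")
    if team is None:
        # No team given: Weave falls back to your default entity.
        return project
    if not team or "/" in team:
        raise ValueError("team must be a non-empty name without '/'")
    return f"{team}/{project}"


print(weave_project_name("google-genai"))                  # google-genai
print(weave_project_name("google-genai", team="my-team"))  # my-team/google-genai

# Usage (requires W&B credentials):
# weave.init(project_name=weave_project_name("google-genai", team="my-team"))
```

Validating the names up front surfaces a typo such as `"my-team/"` locally instead of as a project lookup error at runtime.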

Weave also automatically captures traces for the Vertex AI API. To start tracking, call `weave.init(project_name="<YOUR-WANDB-PROJECT-NAME>")` and use the library as normal.

```python
import vertexai
from vertexai.generative_models import GenerativeModel

import weave

weave.init(project_name="vertex-ai-test")

vertexai.init(project="<YOUR-VERTEXAI-PROJECT-NAME>", location="<YOUR-VERTEXAI-PROJECT-LOCATION>")

model = GenerativeModel("gemini-1.5-flash-002")

response = model.generate_content(
    "What's a good name for a flower shop specialising in selling dried flower bouquets?"
)
```

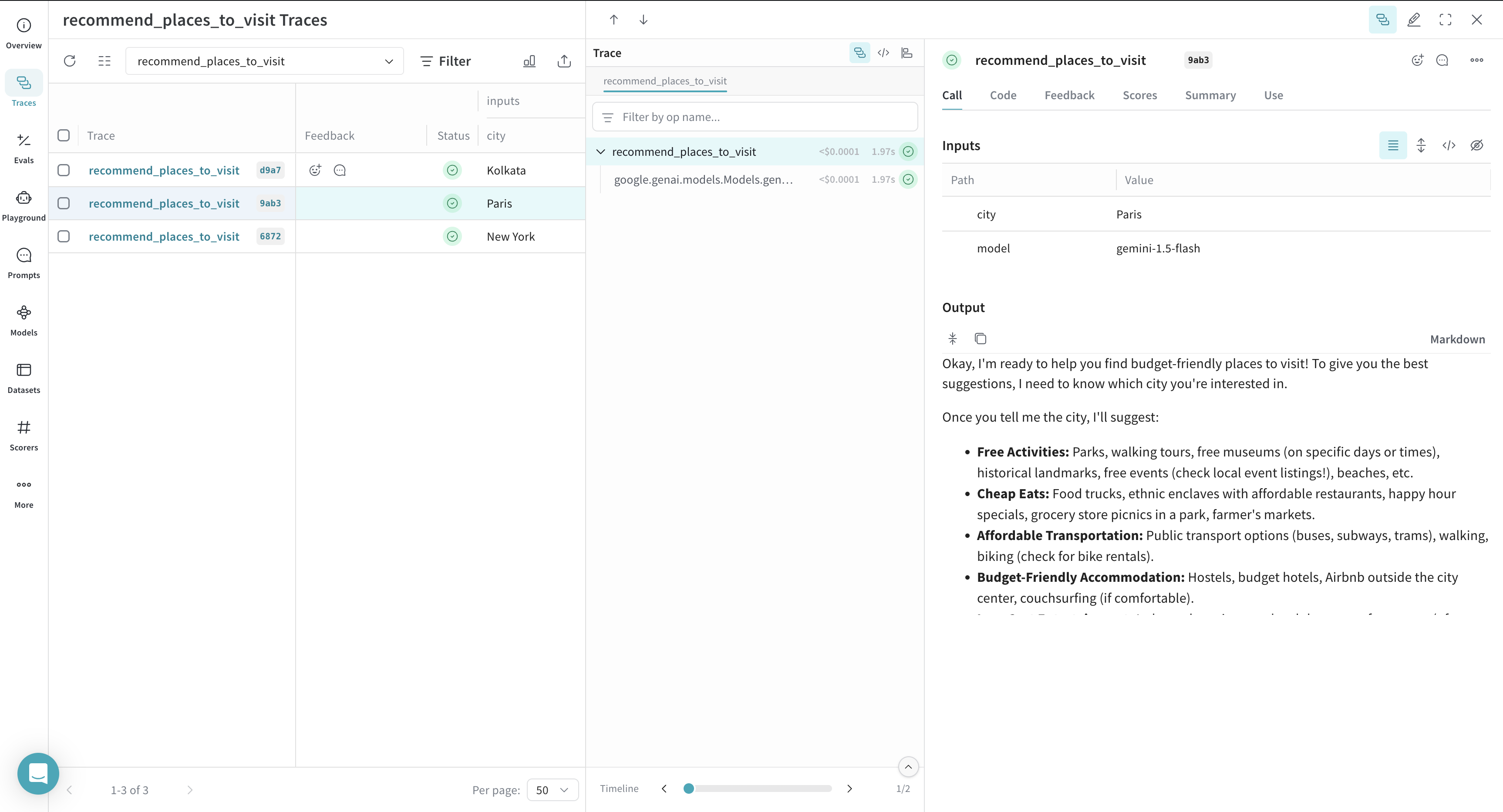

Track your own ops

Wrapping a function with `@weave.op` captures its inputs, outputs, and app logic so you can debug how data flows through your app. You can nest ops as deeply as you like to build a tree of the functions you want to track. Weave also versions your code automatically as you experiment, capturing ad-hoc changes that haven't been committed to git.

To track a function, decorate it with `@weave.op`.

In the example below, `recommend_places_to_visit` is decorated with `@weave.op` and recommends budget-friendly places to visit in a given city.

```python
import os

from google import genai
from google.genai import types

import weave

weave.init(project_name="google-genai")

google_client = genai.Client(api_key=os.getenv("GOOGLE_GENAI_KEY"))


@weave.op()
def recommend_places_to_visit(city: str, model: str = "gemini-1.5-flash") -> str:
    # The instruction goes in the system prompt; the city goes in the user content.
    response = google_client.models.generate_content(
        model=model,
        contents=f"Suggest budget-friendly places to visit in {city}.",
        config=types.GenerateContentConfig(
            system_instruction="You are a helpful assistant meant to suggest all budget-friendly places to visit in a city",
        ),
    )
    return response.text


recommend_places_to_visit("New York")
recommend_places_to_visit("Paris")
recommend_places_to_visit("Kolkata")
```

Create a Model for easier experimentation

Organizing experimentation is difficult when there are many moving pieces. The `Model` class lets you capture and organize your app's experimental details, such as the system prompt or the model you're using, so you can compare different iterations of your app.

In addition to versioning code and capturing inputs and outputs, Models capture the structured parameters that control your application's behavior, making it easy to find which parameters worked best. You can also use Weave Models with `serve` and with Evaluations.

In the example below, you can experiment with `CityVisitRecommender`. Every time you change one of its attributes (such as `model`), you get a new version of `CityVisitRecommender`.

```python
import os

from google import genai
from google.genai import types

import weave

weave.init(project_name="google-genai")

google_client = genai.Client(api_key=os.getenv("GOOGLE_GENAI_KEY"))


class CityVisitRecommender(weave.Model):
    # Changing either attribute produces a new version of the Model.
    model: str
    system_prompt: str = "You are a helpful assistant meant to suggest all budget-friendly places to visit in a city"

    @weave.op()
    def predict(self, city: str) -> str:
        response = google_client.models.generate_content(
            model=self.model,
            contents=f"Suggest budget-friendly places to visit in {city}.",
            config=types.GenerateContentConfig(system_instruction=self.system_prompt),
        )
        return response.text


city_recommender = CityVisitRecommender(model="gemini-1.5-flash")

print(city_recommender.predict("New York"))
print(city_recommender.predict("San Francisco"))
print(city_recommender.predict("Los Angeles"))
```
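One way to picture "a new version every time you change an attribute" is a version identifier derived from the attribute values themselves. The sketch below assumes exactly that and nothing more: `ToyRecommenderConfig` and `toy_version` are hypothetical names, the hash is not Weave's actual digest algorithm, and unlike Weave it ignores the code of `predict`.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class ToyRecommenderConfig:
    """Hypothetical stand-in for a Model's attributes (illustration only)."""

    model: str
    system_prompt: str = "You are a helpful assistant meant to suggest all budget-friendly places to visit in a city"


def toy_version(config: ToyRecommenderConfig) -> str:
    """Return a short content hash of the attribute values.

    Assumption for illustration: the version depends only on attribute
    values, serialized with sorted keys so field order doesn't matter.
    """
    payload = json.dumps(asdict(config), sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


v1 = ToyRecommenderConfig(model="gemini-1.5-flash")
v1_again = ToyRecommenderConfig(model="gemini-1.5-flash")
v2 = replace(v1, model="gemini-2.0-flash")

print(toy_version(v1) == toy_version(v1_again))  # True: same attributes, same version
print(toy_version(v1) == toy_version(v2))        # False: changing `model` yields a new version
```

Because the identifier is computed from content, rerunning an unchanged configuration maps back to the same version instead of creating a duplicate.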